4 The TMLE Framework

Jeremy Coyle and Nima Hejazi

Based on the tmle3 R package.

4.1 Learning Objectives

By the end of this chapter, you will be able to

- Understand why we use TMLE for effect estimation.

- Use

tmle3to estimate an Average Treatment Effect (ATE). - Understand how to use

tmle3“Specs” objects. - Fit

tmle3for a custom set of target parameters. - Use the delta method to estimate transformations of target parameters.

4.2 Introduction

In the previous chapter on sl3 we learned how to estimate a regression

function like \(\mathbb{E}[Y \mid X]\) from data. That’s an important first step

in learning from data, but how can we use this predictive model to estimate

statistical and causal effects?

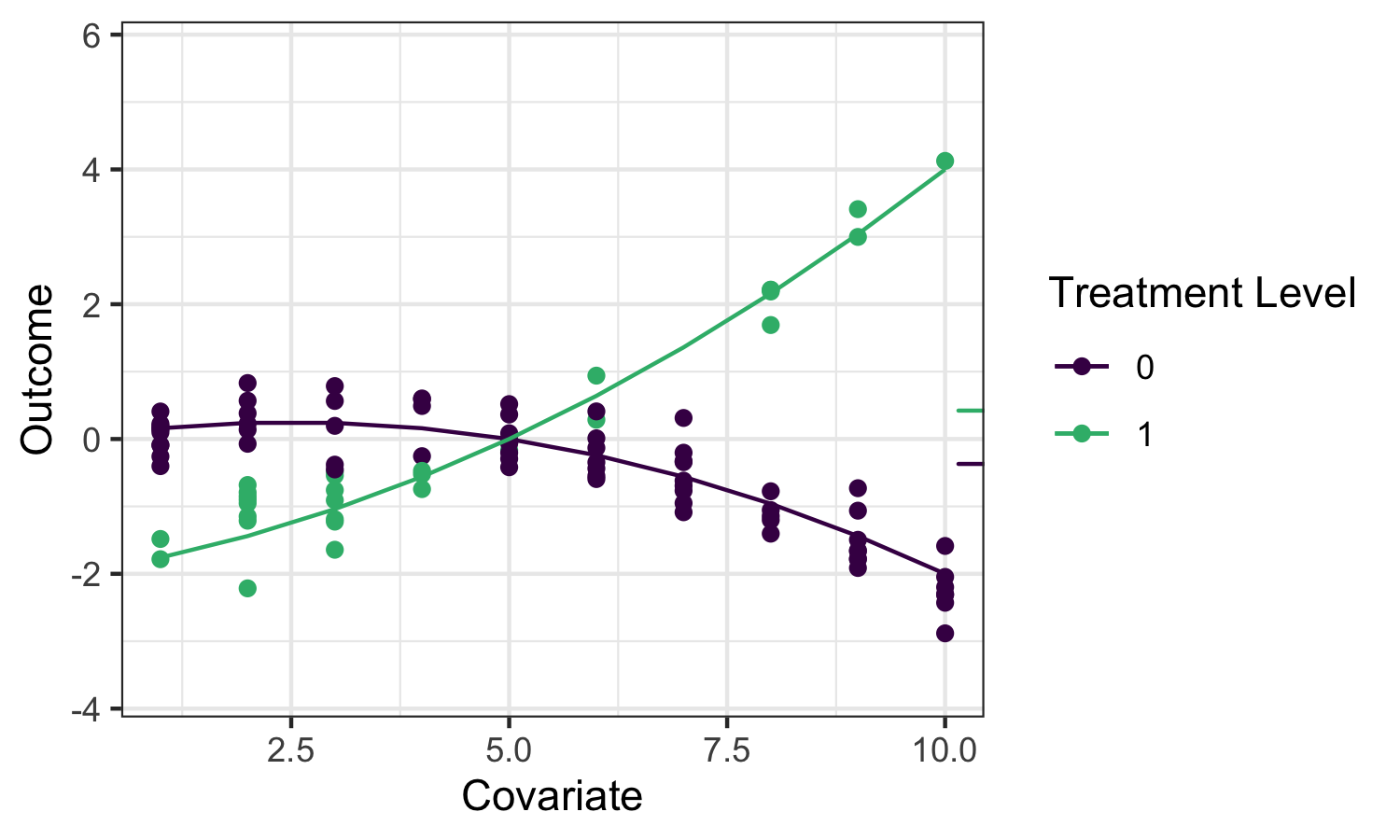

Going back to the roadmap for targeted learning, suppose we’d like to estimate the effect of a treatment variable \(A\) on an outcome \(Y\). As discussed, one potential parameter that characterizes that effect is the Average Treatment Effect (ATE), defined as \(\psi_0 = \mathbb{E}_W[\mathbb{E}[Y \mid A=1,W] - \mathbb{E}[Y \mid A=0,W]]\) and interpreted as the difference in mean outcome under when treatment \(A=1\) and \(A=0\), averaging over the distribution of covariates \(W\). We’ll illustrate several potential estimators for this parameter, and motivate the use of the TMLE (targeted maximum likelihood estimation; targeted minimum loss-based estimation) framework, using the following example data:

The small ticks on the right indicate the mean outcomes (averaging over \(W\)) under \(A=1\) and \(A=0\) respectively, so their difference is the quantity we’d like to estimate.

While we hope to motivate the application of TMLE in this chapter, we refer the interested reader to the two Targeted Learning books and associated works for full technical details.

4.3 Substitution Estimators

We can use sl3 to fit a Super Learner or other regression model to estimate

the outcome regression function \(\mathbb{E}_0[Y \mid A,W]\), which we often refer

to as \(\overline{Q}_0(A,W)\) and whose estimate we denote \(\overline{Q}_n(A,W)\).

To construct an estimate of the ATE \(\psi_n\), we need only “plug-in” the

estimates of \(\overline{Q}_n(A,W)\), evaluated at the two intervention contrasts,

to the corresponding ATE “plug-in” formula:

\(\psi_n = \frac{1}{n}\sum(\overline{Q}_n(1,W)-\overline{Q}_n(0,W))\). This kind

of estimator is called a plug-in or substitution estimator, since accurate

estimates \(\psi_n\) of the parameter \(\psi_0\) may be obtained by substituting

estimates \(\overline{Q}_n(A,W)\) for the relevant regression functions

\(\overline{Q}_0(A,W)\) themselves.

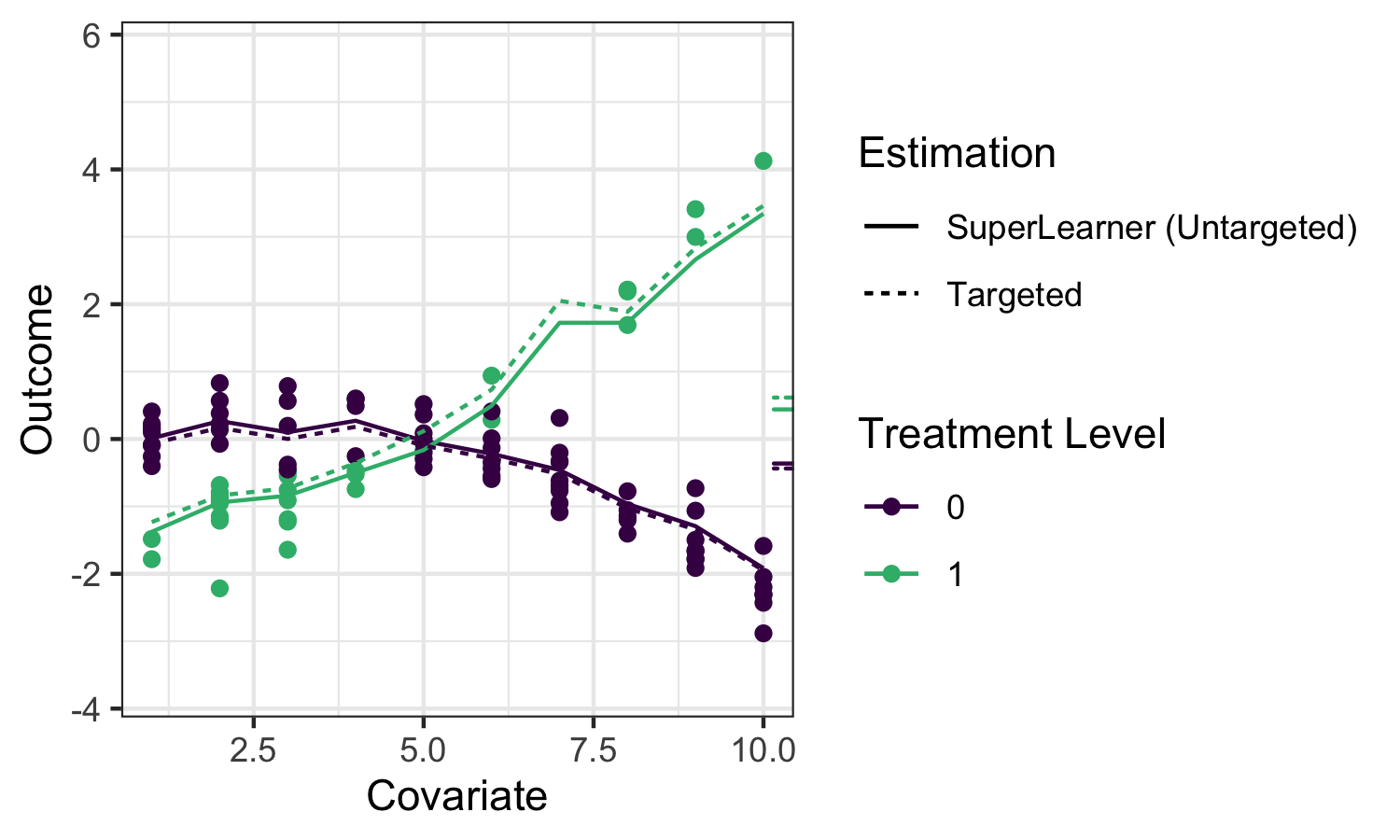

Applying sl3 to estimate the outcome regression in our example, we can see

that the ensemble machine learning predictions fit the data quite well:

The solid lines indicate the sl3 estimate of the regression function, with the

dotted lines indicating the tmle3 updates (described below).

While substitution estimators are intuitive, naively using this approach with a

Super Learner estimate of \(\bar{Q}_0(A,W)\) has several limitations. First, Super

Learner is selecting learner weights to minimize risk across the entire

regression function, instead of “targeting” the ATE parameter we hope to

estimate, leading to biased estimation. That is, sl3 is trying to do well on

the full regression curve on the left, instead of focusing on the small ticks on

the right. What’s more, the sampling distribution of this approach is not

asymptotically linear, and therefore inference is not possible.

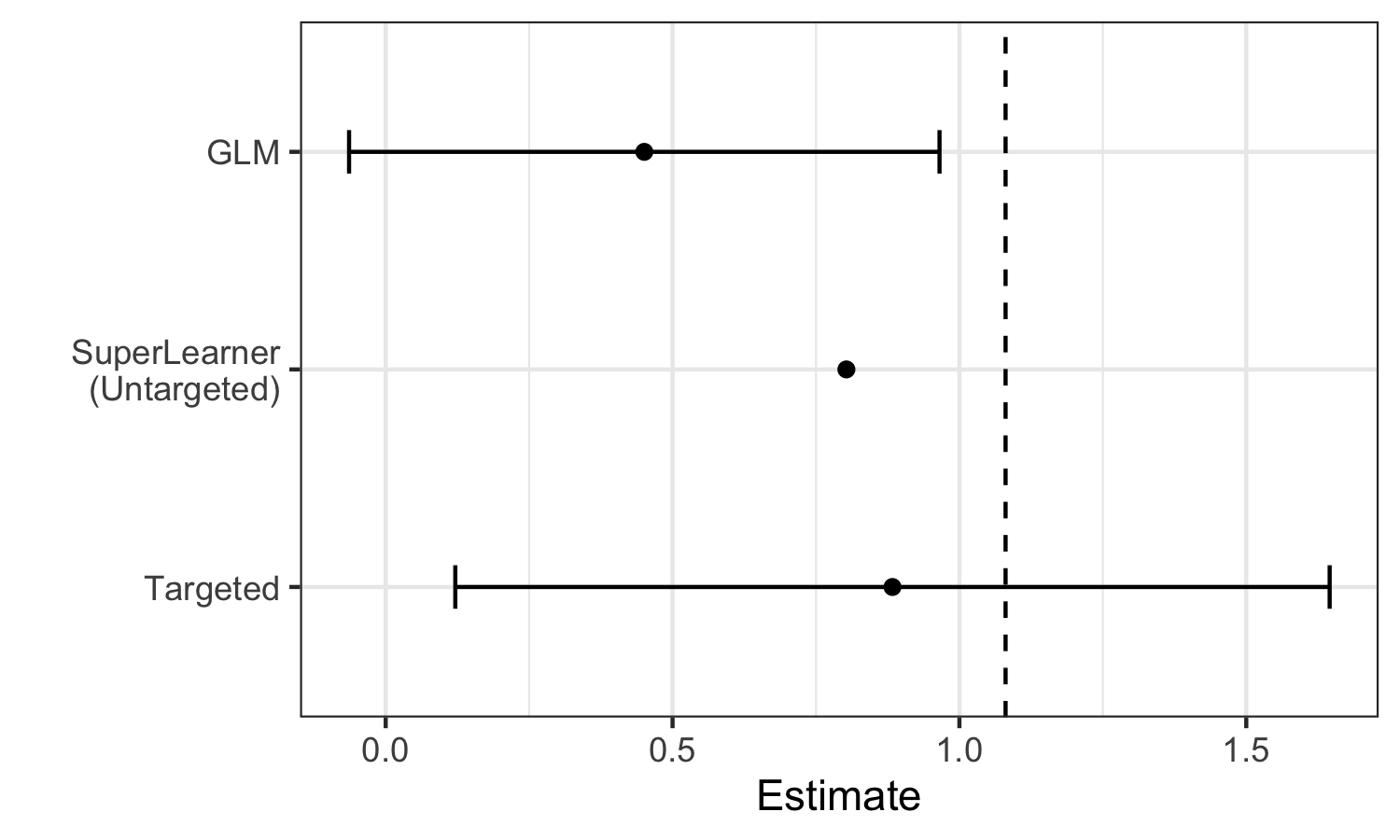

We can see these limitations illustrated in the estimates generated for the example data:

We see that Super Learner, estimates the true parameter value (indicated by the dashed vertical line) more accurately than GLM. However, it is still less accurate than TMLE, and valid inference is not possible. In contrast, TMLE achieves a less biased estimator and valid inference.

4.4 Targeted Maximum Likelihood Estimation

TMLE takes an initial estimate \(\overline{Q}_n(A,W)\) as well as an estimate of the propensity score \(g_n(A \mid W) = \mathbb{P}(A = 1 \mid W)\) and produces an updated estimate \(\overline{Q}^{\star}_n(A,W)\) that is “targeted” to the parameter of interest. TMLE keeps the benefits of substitution estimators (it is one), but augments the original, potentially erratic estimates to correct for bias while also resulting in an asymptotically linear (and thus normally distributed) estimator that accommodates inference via asymptotically consistent Wald-style confidence intervals.

4.4.1 TMLE Updates

There are different types of TMLEs (and, sometimes, multiple for the same set of

target parameters) – below, we give an example of the algorithm for TML

estimation of the ATE. \(\overline{Q}^{\star}_n(A,W)\) is the TMLE-augmented

estimate \(f(\overline{Q}^{\star}_n(A,W)) = f(\overline{Q}_n(A,W)) + \epsilon \cdot H_n(A,W)\), where \(f(\cdot)\) is the appropriate link function (e.g.,

\(\text{logit}(x) = \log\left(\frac{x}{1 - x}\right)\)), and an estimate

\(\epsilon_n\) of the coefficient \(\epsilon\) of the “clever covariate” \(H_n(A,W)\)

is computed. The form of the covariate \(H_n(A,W)\) differs across target

parameters; in this case of the ATE, it is \(H_n(A,W) = \frac{A}{g_n(A \mid W)} - \frac{1-A}{1-g_n(A, W)}\), with \(g_n(A,W) = \mathbb{P}(A=1 \mid W)\) being the

estimated propensity score, so the estimator depends both on the initial fit (by

sl3) of the outcome regression (\(\overline{Q}_n\)) and of the propensity score

(\(g_n\)).

There are several robust augmentations that are used across the tlverse,

including the use of an additional layer of cross-validation to avoid

over-fitting bias (i.e., CV-TMLE) as well as approaches for more consistently

estimating several parameters simultaneously (e.g., the points on a survival

curve).

4.4.2 Statistical Inference

Since TMLE yields an asymptotically linear estimator, obtaining statistical inference is very convenient. Each TML estimator has a corresponding (efficient) influence function (often, “EIF”, for short) that describes the asymptotic distribution of the estimator. By using the estimated EIF, Wald-style inference (asymptotically correct confidence intervals) can be constructed simply by plugging into the form of the EIF our initial estimates \(\overline{Q}^{\star}_n\) and \(g_n\), then computing the sample standard error.

The following sections describe both a simple and more detailed way of

specifying and estimating a TMLE in the tlverse. In designing tmle3, we

sought to replicate as closely as possible the very general estimation framework

of TMLE, and so each theoretical object relevant to TMLE is encoded in a

corresponding software object/method. First, we will present the simple

application of tmle3 to the WASH Benefits example, and then go on to describe

the underlying objects in greater detail.

4.5 Easy-Bake Example: tmle3 for ATE

We’ll illustrate the most basic use of TMLE using the WASH Benefits data introduced earlier and estimating an average treatment effect.

4.5.2 Define the variable roles

We’ll use the common \(W\) (covariates), \(A\) (treatment/intervention), \(Y\)

(outcome) data structure. tmle3 needs to know what variables in the dataset

correspond to each of these roles. We use a list of character vectors to tell

it. We call this a “Node List” as it corresponds to the nodes in a Directed

Acyclic Graph (DAG), a way of displaying causal relationships between variables.

node_list <- list(

W = c(

"month", "aged", "sex", "momage", "momedu",

"momheight", "hfiacat", "Nlt18", "Ncomp", "watmin",

"elec", "floor", "walls", "roof", "asset_wardrobe",

"asset_table", "asset_chair", "asset_khat",

"asset_chouki", "asset_tv", "asset_refrig",

"asset_bike", "asset_moto", "asset_sewmach",

"asset_mobile"

),

A = "tr",

Y = "whz"

)4.5.3 Handle Missingness

Currently, missingness in tmle3 is handled in a fairly simple way:

- Missing covariates are median- (for continuous) or mode- (for discrete)

imputed, and additional covariates indicating imputation are generated, just

as described in the

sl3chapter. - Missing treatment variables are excluded – such observations are dropped.

- Missing outcomes are efficiently handled by the automatic calculation (and incorporation into estimators) of inverse probability of censoring weights (IPCW); this is also known as IPCW-TMLE and may be thought of as a joint intervention to remove missingness and is analogous to the procedure used with classical inverse probability weighted estimators.

These steps are implemented in the process_missing function in tmle3:

processed <- process_missing(washb_data, node_list)

washb_data <- processed$data

node_list <- processed$node_list4.5.4 Create a “Spec” Object

tmle3 is general, and allows most components of the TMLE procedure to be

specified in a modular way. However, most end-users will not be interested in

manually specifying all of these components. Therefore, tmle3 implements a

tmle3_Spec object that bundles a set of components into a specification

(“Spec”) that, with minimal additional detail, can be run by an end-user.

We’ll start with using one of the specs, and then work our way down into the

internals of tmle3.

ate_spec <- tmle_ATE(

treatment_level = "Nutrition + WSH",

control_level = "Control"

)4.5.5 Define the learners

Currently, the only other thing a user must define are the sl3 learners used

to estimate the relevant factors of the likelihood: Q and g.

This takes the form of a list of sl3 learners, one for each likelihood factor

to be estimated with sl3:

# choose base learners

lrnr_mean <- make_learner(Lrnr_mean)

lrnr_rf <- make_learner(Lrnr_ranger)

# define metalearners appropriate to data types

ls_metalearner <- make_learner(Lrnr_nnls)

mn_metalearner <- make_learner(

Lrnr_solnp, metalearner_linear_multinomial,

loss_loglik_multinomial

)

sl_Y <- Lrnr_sl$new(

learners = list(lrnr_mean, lrnr_rf),

metalearner = ls_metalearner

)

sl_A <- Lrnr_sl$new(

learners = list(lrnr_mean, lrnr_rf),

metalearner = mn_metalearner

)

learner_list <- list(A = sl_A, Y = sl_Y)Here, we use a Super Learner as defined in the previous chapter. In the future, we plan to include reasonable defaults learners.

4.5.6 Fit the TMLE

We now have everything we need to fit the tmle using tmle3:

tmle_fit <- tmle3(ate_spec, washb_data, node_list, learner_list)

print(tmle_fit)

#> A tmle3_Fit that took 1 step(s)

#> type param init_est tmle_est se

#> 1: ATE ATE[Y_{A=Nutrition + WSH}-Y_{A=Control}] -0.005231 0.00812 0.050679

#> lower upper psi_transformed lower_transformed upper_transformed

#> 1: -0.091208 0.10745 0.00812 -0.091208 0.107454.5.7 Evaluate the Estimates

We can see the summary results by printing the fit object. Alternatively, we can extra results from the summary by indexing into it:

estimates <- tmle_fit$summary$psi_transformed

print(estimates)

#> [1] 0.00812

4.6 tmle3 Components

Now that we’ve successfully used a spec to obtain a TML estimate, let’s look under the hood at the components. The spec has a number of functions that generate the objects necessary to define and fit a TMLE.

4.6.1 tmle3_task

First is, a tmle3_Task, analogous to an sl3_Task, containing the data we’re

fitting the TMLE to, as well as an NPSEM generated from the node_list

defined above, describing the variables and their relationships.

tmle_task <- ate_spec$make_tmle_task(washb_data, node_list)

tmle_task$npsem

#> $W

#> tmle3_Node: W

#> Variables: month, aged, sex, momedu, hfiacat, Nlt18, Ncomp, watmin, elec, floor, walls, roof, asset_wardrobe, asset_table, asset_chair, asset_khat, asset_chouki, asset_tv, asset_refrig, asset_bike, asset_moto, asset_sewmach, asset_mobile, momage, momheight, delta_momage, delta_momheight

#> Parents:

#>

#> $A

#> tmle3_Node: A

#> Variables: tr

#> Parents: W

#>

#> $Y

#> tmle3_Node: Y

#> Variables: whz

#> Parents: A, W4.6.2 Initial Likelihood

Next, is an object representing the likelihood, factorized according to the NPSEM described above:

initial_likelihood <- ate_spec$make_initial_likelihood(

tmle_task,

learner_list

)

print(initial_likelihood)

#> W: Lf_emp

#> A: LF_fit

#> Y: LF_fitThese components of the likelihood indicate how the factors were estimated: the

marginal distribution of \(W\) was estimated using NP-MLE, and the conditional

distributions of \(A\) and \(Y\) were estimated using sl3 fits (as defined with

the learner_list) above.

We can use this in tandem with the tmle_task object to obtain likelihood

estimates for each observation:

initial_likelihood$get_likelihoods(tmle_task)

#> W A Y

#> 1: 0.00021299 0.34925 -0.35834

#> 2: 0.00021299 0.36117 -0.93261

#> 3: 0.00021299 0.34740 -0.80873

#> 4: 0.00021299 0.34248 -0.94020

#> 5: 0.00021299 0.34134 -0.57866

#> ---

#> 4691: 0.00021299 0.23375 -0.58997

#> 4692: 0.00021299 0.23366 -0.22769

#> 4693: 0.00021299 0.22660 -0.74235

#> 4694: 0.00021299 0.28944 -0.91796

#> 4695: 0.00021299 0.19533 -1.038784.6.3 Targeted Likelihood (updater)

We also need to define a “Targeted Likelihood” object. This is a special type

of likelihood that is able to be updated using an tmle3_Update object. This

object defines the update strategy (e.g., submodel, loss function, CV-TMLE or

not).

targeted_likelihood <- Targeted_Likelihood$new(initial_likelihood)When constructing the targeted likelihood, you can specify different update

options. See the documentation for tmle3_Update for details of the different

options. For example, you can disable CV-TMLE (the default in tmle3) as

follows:

targeted_likelihood_no_cv <-

Targeted_Likelihood$new(initial_likelihood,

updater = list(cvtmle = FALSE)

)4.6.4 Parameter Mapping

Finally, we need to define the parameters of interest. Here, the spec defines a single parameter, the ATE. In the next section, we’ll see how to add additional parameters.

tmle_params <- ate_spec$make_params(tmle_task, targeted_likelihood)

print(tmle_params)

#> [[1]]

#> Param_ATE: ATE[Y_{A=Nutrition + WSH}-Y_{A=Control}]4.6.5 Putting it all together

Having used the spec to manually generate all these components, we can now

manually fit a tmle3:

tmle_fit_manual <- fit_tmle3(

tmle_task, targeted_likelihood, tmle_params,

targeted_likelihood$updater

)

print(tmle_fit_manual)

#> A tmle3_Fit that took 1 step(s)

#> type param init_est tmle_est se

#> 1: ATE ATE[Y_{A=Nutrition + WSH}-Y_{A=Control}] -0.0045451 0.01174 0.050807

#> lower upper psi_transformed lower_transformed upper_transformed

#> 1: -0.08784 0.11132 0.01174 -0.08784 0.11132The result is equivalent to fitting using the tmle3 function as above.

4.7 Fitting tmle3 with multiple parameters

Above, we fit a tmle3 with just one parameter. tmle3 also supports fitting

multiple parameters simultaneously. To illustrate this, we’ll use the

tmle_TSM_all spec:

tsm_spec <- tmle_TSM_all()

targeted_likelihood <- Targeted_Likelihood$new(initial_likelihood)

all_tsm_params <- tsm_spec$make_params(tmle_task, targeted_likelihood)

print(all_tsm_params)

#> [[1]]

#> Param_TSM: E[Y_{A=Control}]

#>

#> [[2]]

#> Param_TSM: E[Y_{A=Handwashing}]

#>

#> [[3]]

#> Param_TSM: E[Y_{A=Nutrition}]

#>

#> [[4]]

#> Param_TSM: E[Y_{A=Nutrition + WSH}]

#>

#> [[5]]

#> Param_TSM: E[Y_{A=Sanitation}]

#>

#> [[6]]

#> Param_TSM: E[Y_{A=WSH}]

#>

#> [[7]]

#> Param_TSM: E[Y_{A=Water}]This spec generates a Treatment Specific Mean (TSM) for each level of the exposure variable. Note that we must first generate a new targeted likelihood, as the old one was targeted to the ATE. However, we can recycle the initial likelihood we fit above, saving us a super learner step.

4.7.1 Delta Method

We can also define parameters based on Delta Method Transformations of other parameters. For instance, we can estimate a ATE using the delta method and two of the above TSM parameters:

ate_param <- define_param(

Param_delta, targeted_likelihood,

delta_param_ATE,

list(all_tsm_params[[1]], all_tsm_params[[4]])

)

print(ate_param)

#> Param_delta: E[Y_{A=Nutrition + WSH}] - E[Y_{A=Control}]This can similarly be used to estimate other derived parameters like Relative Risks, and Population Attributable Risks

4.7.2 Fit

We can now fit a TMLE simultaneously for all TSM parameters, as well as the above defined ATE parameter

all_params <- c(all_tsm_params, ate_param)

tmle_fit_multiparam <- fit_tmle3(

tmle_task, targeted_likelihood, all_params,

targeted_likelihood$updater

)

print(tmle_fit_multiparam)

#> A tmle3_Fit that took 1 step(s)

#> type param init_est tmle_est

#> 1: TSM E[Y_{A=Control}] -0.5959678 -0.620830

#> 2: TSM E[Y_{A=Handwashing}] -0.6188184 -0.660230

#> 3: TSM E[Y_{A=Nutrition}] -0.6111402 -0.606586

#> 4: TSM E[Y_{A=Nutrition + WSH}] -0.6005128 -0.608949

#> 5: TSM E[Y_{A=Sanitation}] -0.5857464 -0.578472

#> 6: TSM E[Y_{A=WSH}] -0.5205610 -0.448252

#> 7: TSM E[Y_{A=Water}] -0.5657364 -0.537709

#> 8: ATE E[Y_{A=Nutrition + WSH}] - E[Y_{A=Control}] -0.0045451 0.011881

#> se lower upper psi_transformed lower_transformed

#> 1: 0.029901 -0.679435 -0.56223 -0.620830 -0.679435

#> 2: 0.041719 -0.741998 -0.57846 -0.660230 -0.741998

#> 3: 0.042047 -0.688996 -0.52418 -0.606586 -0.688996

#> 4: 0.041285 -0.689867 -0.52803 -0.608949 -0.689867

#> 5: 0.042396 -0.661566 -0.49538 -0.578472 -0.661566

#> 6: 0.045506 -0.537442 -0.35906 -0.448252 -0.537442

#> 7: 0.039253 -0.614644 -0.46077 -0.537709 -0.614644

#> 8: 0.050801 -0.087688 0.11145 0.011881 -0.087688

#> upper_transformed

#> 1: -0.56223

#> 2: -0.57846

#> 3: -0.52418

#> 4: -0.52803

#> 5: -0.49538

#> 6: -0.35906

#> 7: -0.46077

#> 8: 0.111454.8 Exercises

4.8.1 Estimation of the ATE with tmle3

Follow the steps below to estimate an average treatment effect using data from

the Collaborative Perinatal Project (CPP), available in the sl3 package. To

simplify this example, we define a binary intervention variable, parity01 –

an indicator of having one or more children before the current child and a

binary outcome, haz01 – an indicator of having an above average height for

age.

# load the data set

data(cpp)

cpp <- cpp %>%

as_tibble() %>%

dplyr::filter(!is.na(haz)) %>%

mutate(

parity01 = as.numeric(parity > 0),

haz01 = as.numeric(haz > 0)

)- Define the variable roles \((W,A,Y)\) by creating a list of these nodes.

Include the following baseline covariates in \(W\):

apgar1,apgar5,gagebrth,mage,meducyrs,sexn. Both \(A\) and \(Y\) are specified above. - Define a

tmle3_Specobject for the ATE,tmle_ATE(). - Using the same base learning libraries defined above, specify

sl3base learners for estimation of \(\overline{Q}_0 = \mathbb{E}_0(Y \mid A,Y)\) and \(g_0 = \mathbb{P}(A = 1 \mid W)\). - Define the metalearner like below.

metalearner <- make_learner(

Lrnr_solnp,

loss_function = loss_loglik_binomial,

learner_function = metalearner_logistic_binomial

)- Define one super learner for estimating \(\overline{Q}_0\) and another for estimating \(g_0\). Use the metalearner above for both super learners.

- Create a list of the two super learners defined in the step above and call

this object

learner_list. The list names should beA(defining the super learner for estimation of \(g_0\)) andY(defining the super learner for estimation of \(\overline{Q}_0\)). - Fit the TMLE with the

tmle3function by specifying (1) thetmle3_Spec, which we defined in Step 2; (2) the data; (3) the list of nodes, which we specified in Step 1; and (4) the list of super learners for estimation of \(g_0\) and \(\overline{Q}_0\), which we defined in Step 6. Note: Like before, you will need to explicitly make a copy of the data (to work arounddata.tableoptimizations), e.g., (cpp2 <- data.table::copy(cpp)), then use thecpp2data going forward.

4.8.2 Estimation of Strata-Specific ATEs with tmle3

For this exercise, we will work with a random sample of 5,000 patients who

participated in the International Stroke Trial (IST). This data is described in

the Chapter 3.2 of the tlverse handbook. We included the data below

and a summarized description that is relevant for this exercise.

The outcome, \(Y\), indicates recurrent ischemic stroke within 14 days after

randomization (DRSISC); the treatment of interest, \(A\), is the randomized

aspirin vs. no aspirin treatment allocation (RXASP in ist); and the

adjustment set, \(W\), consists simply of other variables measured at baseline. In

this data, the outcome is occasionally missing, but there is no need to create a

variable indicating this missingness (such as \(\Delta\)) for analyses in the

tlverse, since the missingness is automatically detected when NA are present

in the outcome. Covariates with missing values (RATRIAL, RASP3 and RHEP24)

have already been imputed. Additional covariates were created

(MISSING_RATRIAL_RASP3 and MISSING_RHEP24), which indicate whether or not

the covariate was imputed. The missingness was identical for RATRIAL and

RASP3, which is why only one covariate indicating imputation for these two

covariates was created.

- Estimate the average effect of randomized asprin treatment (

RXASP= 1) on recurrent ischemic stroke. Even though the missingness mechanism on \(Y\), \(\Delta\), does not need to be specified in the node list, it does still need to be accounted for in the TMLE. In other words, for this estimation problem, \(\Delta\) is a relevant factor of the likelihood. Thus, when defining the list ofsl3learners for each likelihood factor, be sure to include a list of learners for estimation of \(\Delta\), saysl_Delta, and specify this in the learner list, like solearner_list <- list(A = sl_A, delta_Y = sl_Delta, Y = sl_Y). - Recall that this RCT was conducted internationally. Suposse there is concern

that the dose of asprin may have varied across geographical regions, and an

average across all geographical regions may not be warranted. Calculate the

strata specific ATEs according to geographical region (

REGION).

4.9 Summary

tmle3 is a general purpose framework for generating TML estimates. The easiest

way to use it is to use a predefined spec, allowing you to just fill in the

blanks for the data, variable roles, and sl3 learners. However, digging under

the hood allows users to specify a wide range of TMLEs. In the next sections,

we’ll see how this framework can be used to estimate advanced parameters such as

optimal treatments and stochastic shift interventions.